June 12, 2025

CRUSE: Multi-Agent Context Reactive User Experience

Introducing CRUSE: a dynamic, multi-agent user interface that understands context, adapts in real time, and moves AI beyond the chatbot paradigm

To assume that modern-day AI systems are glorified chatbots is to sell them short. Still, the brains of these systems are Gen AI models, which ‘think’ in natural language, so interacting with them through chat has become our go-to.

It doesn’t have to be.

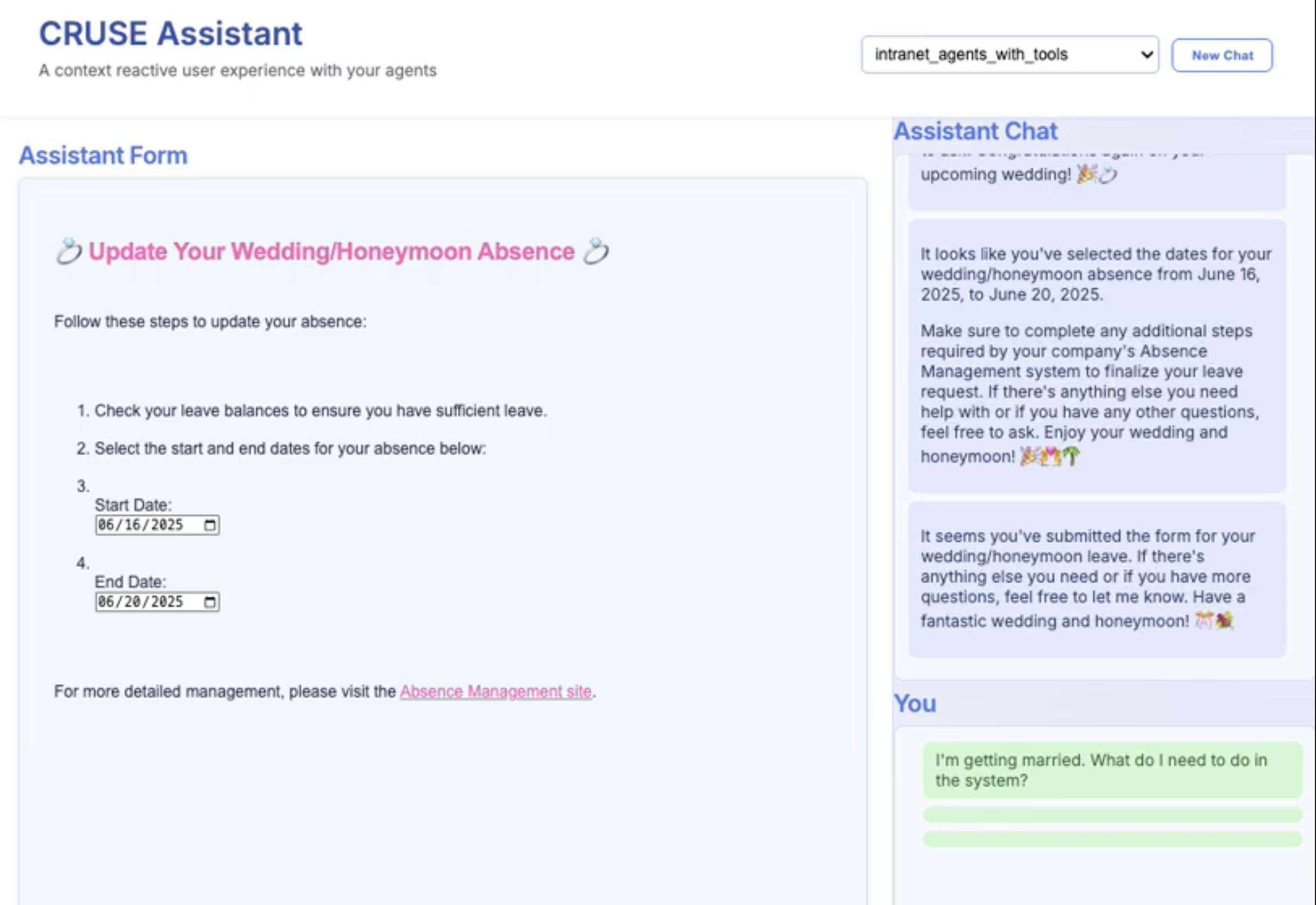

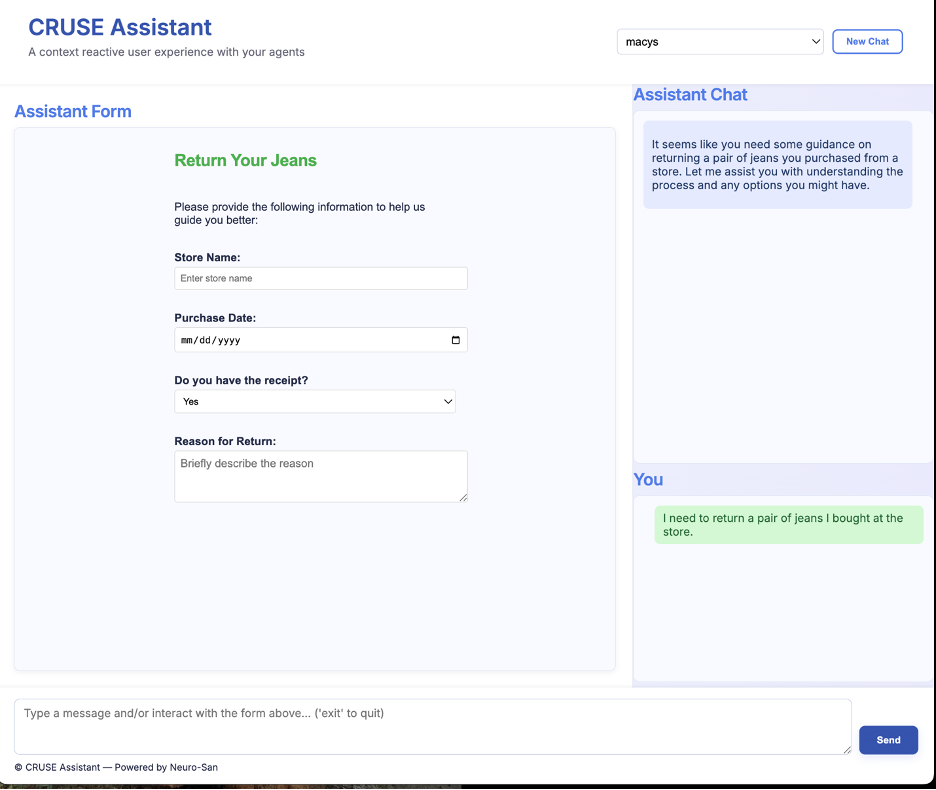

A Context Reactive User Experience (CRUSE) is one where the intent of the user is determined by the context of their interactions with the user interface at the time of issuing a command. For instance, if a user points at the image of a dog and says, “find me more!” the system should know that the user wants it to find more images of dogs. The elements of the interface are also dynamically generated based on what the system considers to be the most natural way to interact with the user. For example, rather than asking the user, “would you like me to search for the word ‘dog’, images of dogs, or either?” the system would produce a group of radio buttons with the three choices so the user can simply click on the one they want.
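As a rough illustration of that last point, here is a minimal Python sketch of how a clarifying question with a fixed set of answers could be turned into a radio-button group instead of a line of chat. The function name `render_radio_group` and the field name `search_target` are made up for this example; this is not how the CRUSE app itself is written.

```python
from html import escape


def render_radio_group(name: str, prompt: str, options: list[str]) -> str:
    """Return an HTML fieldset that offers `options` as radio buttons.

    Hypothetical helper for illustration only; all text is HTML-escaped.
    """
    safe_name = escape(name)
    lines = [f"<fieldset><legend>{escape(prompt)}</legend>"]
    for index, option in enumerate(options):
        field_id = f"{safe_name}-{index}"
        lines.append(
            f'<input type="radio" id="{field_id}" name="{safe_name}" '
            f'value="{escape(option)}">'
            f'<label for="{field_id}">{escape(option)}</label>'
        )
    lines.append("</fieldset>")
    return "\n".join(lines)


# The three choices from the dog example, offered as clickable options.
form_html = render_radio_group(
    "search_target",
    "What should I search for?",
    ["The word 'dog'", "Images of dogs", "Either"],
)
print(form_html)
```

The user's click then comes back as a single structured value, which is easier for the system to act on than a free-text reply.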

Many years ago, as we worked on the natural language technology that ended up becoming the core of Siri, my brother Siamak and I realized that our multi-agent approach lent itself well to dynamically generating contextual user experiences. As different agents in the system raised their hands to claim a role in handling an input, their respective requirements could be conveyed up-chain. For each requirement that other agents could not fulfill, a UI element could be spontaneously created, and these elements could be collectively consolidated into an intelligent dynamic user interface.
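To make the idea concrete, here is a small Python sketch of that flow under heavy simplification. It is not the original implementation, nor Neuro SAN's: the `Agent` class, the keyword-based claiming, and the flight-booking agents are all invented for illustration. Agents that claim an input pool what they require and what they can provide, and whatever is left unmet becomes a UI field.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Hypothetical agent: claims inputs by keyword, declares needs and offers."""
    name: str
    keywords: set[str]
    requires: set[str] = field(default_factory=set)
    provides: set[str] = field(default_factory=set)

    def claims(self, text: str) -> bool:
        # "Raise a hand" if any keyword appears in the input.
        return bool(self.keywords & set(text.lower().split()))


def unmet_requirements(agents: list[Agent], text: str) -> list[str]:
    """Requirements of claiming agents that no claiming agent can supply."""
    claimants = [agent for agent in agents if agent.claims(text)]
    required = set().union(*(agent.requires for agent in claimants))
    provided = set().union(*(agent.provides for agent in claimants))
    return sorted(required - provided)


agents = [
    Agent("flight_booker", {"flight", "fly"},
          requires={"destination", "date", "traveler_name"}),
    Agent("profile", {"book", "flight", "hotel"},
          provides={"traveler_name"}),
    Agent("weather", {"weather"}, requires={"city"}),
]

# Each unmet requirement becomes one element of the generated interface.
missing = unmet_requirements(agents, "book a flight")
ui_fields = [{"type": "text", "name": name} for name in missing]
print(ui_fields)
```

In this toy run the weather agent never claims the input, the profile agent covers the traveler's name, and only the destination and date are left for the interface to ask about.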

By the same token, as the user interacted with the visual interface, the context of the UI could complement any natural language command or query they might have given the system, and the agents would then take their respective UI context into consideration as they formulated their part of the next response.
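A minimal Python sketch of that complementing step, reusing the dog example from earlier: when a command is too vague to stand alone, the current UI context is attached before the command reaches the agents. The `UIContext` class, the `contextualize` function, and the list of vague words are assumptions made for this illustration; a real system would more likely leave this judgment to the agents themselves.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UIContext:
    """Hypothetical snapshot of what the user is pointing at."""
    focused_element: str  # e.g. "image"
    subject: str          # e.g. "dog"


# Words that suggest the command refers to something on screen (illustrative).
VAGUE_WORDS = {"more", "this", "that", "it", "these", "those"}


def contextualize(command: str, context: Optional[UIContext]) -> str:
    """Attach the UI context to a command that cannot stand on its own."""
    words = {word.strip("!?.,").lower() for word in command.split()}
    if context is None or not (words & VAGUE_WORDS):
        return command
    return (
        f"{command} [UI context: the user is pointing at "
        f"a {context.focused_element} of a {context.subject}]"
    )


pointing_at_dog = UIContext(focused_element="image", subject="dog")
enriched = contextualize("find me more!", pointing_at_dog)
unchanged = contextualize("What is the weather in Paris?", pointing_at_dog)
print(enriched)
print(unchanged)
```

Each agent downstream can then read the enriched command and decide for itself whether the attached context matters to its part of the response.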

That paradigm can readily be applied in today’s multi-agent systems, bringing the user experience to life, and enriching the interactions well beyond what a simple chatbot could.

My good friend Hormoz and I recently added a Context Reactive User Experience (CRUSE) app to Neuro SAN as an example of how such a UI can be created. The implementation also serves as a showcase of the power, simplicity, and extensibility of the Neuro SAN multi-agent platform.

To try it out, simply fire up the CRUSE app by following the instructions here:

https://github.com/cognizant-ai-lab/neuro-san-studio/tree/main/docs/examples/cruse.md

The app runs as a local web server. Opening the link it prints in the console in a browser loads the CRUSE interface. You can use the dropdown at the top of the page to point the app at any agent network in your local registry and interact with that preexisting agent network through a CRUSE UI.

Behind the scenes, the app uses an agent called the CRUSE agent, which formulates agent interactions as text and visual HTML forms. This agent dynamically connects to your desired agent network using a very useful coded tool called ‘call_agent’. The CRUSE agent uses the powerful AAOSA coordination mechanism to discover agents that claim to be able to play a role in servicing a given user query. As the responses are consolidated back through the network of agents, they provide the CRUSE agent with what it needs to design and redesign the UI at every turn.

Feel free to extend and beautify your own version of the CRUSE app and agent. For example, the CRUSE agent is currently a single agent, but you can make it multi-agentic, say by adding an agent that contributes JavaScript to the page, or one that pulls images or dynamically creates emojis depending on the interaction.

This is the beauty of multi-agent systems. You can create a multi-agent system to generate the UX front-end for your other multi-agent systems, and because the coordination mechanism is use-case agnostic, you can extend either side of this equation with little or no change to the other side. That is the power of agentic encapsulation.

Babak Hodjat is the Chief AI Officer at Cognizant and former co-founder & CEO of Sentient. He is responsible for the technology behind the world’s largest distributed AI system.