May 30, 2025

Agents as Tool

Exploring how Neuro SAN enables agents to call other agents as tools, unlocking flexible, parallel, and non‑code‑heavy multi‑agent orchestration

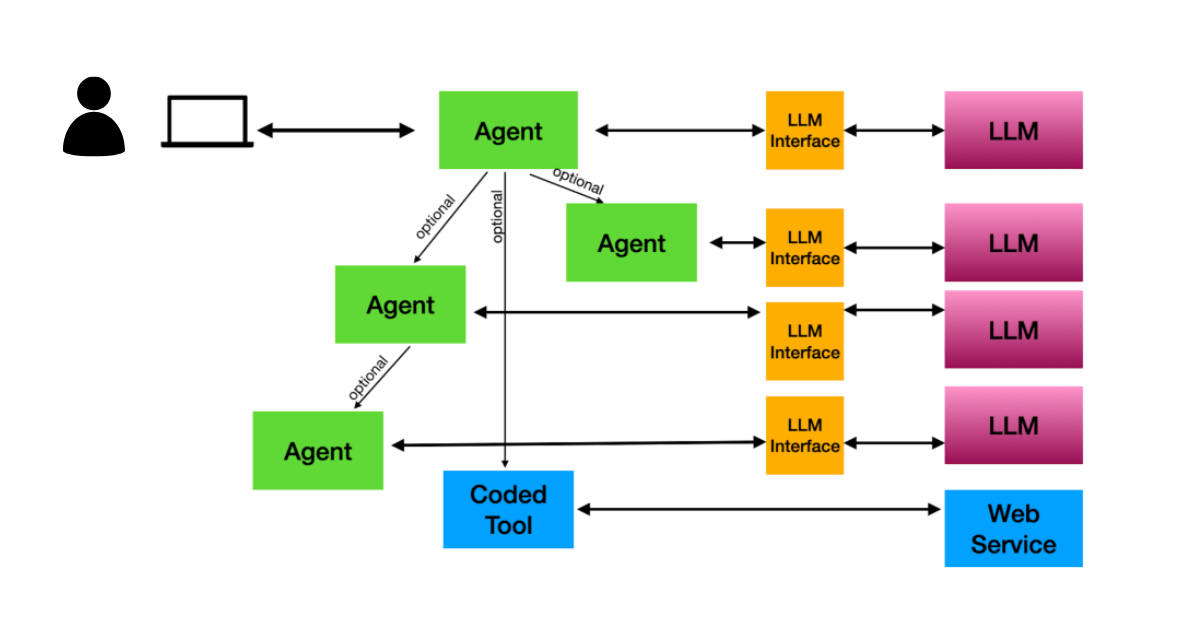

Let’s talk a little about the basics of how Neuro SAN works at a 30,000-ft level.

In general, each Neuro SAN agent network is a list of agent descriptions that can reference each other as Tools. (I capitalize the T here because it’s a specific low-level concept in LLM toolkits like LangChain, but that is beside the point.)

Most coded (read: Python) agents these days are powered by a single LLM and the agent control loop around it that enables the agent magic. Agents can be given a list of Tools that they may call but are not required to call. Traditionally these Tools are bits of Python code with clear descriptions in code in the right places. These descriptions are what the calling agent actually sees: an upstream projection of what the Tool can do and what information it needs in order to be called.
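To make the idea of an "upstream projection" concrete, here is a minimal sketch using only the Python standard library. The `get_weather` function and the `describe_tool` helper are hypothetical names invented for illustration; real toolkits build their Tool descriptions in their own ways.

```python
import inspect


def get_weather(city: str, units: str = "metric") -> str:
    """Return a short weather summary for a city."""
    # Hypothetical stand-in: a real Tool would call a weather service here.
    return f"Weather for {city} in {units} units: sunny"


def describe_tool(func) -> dict:
    """Project a function into the description a calling agent would see."""
    signature = inspect.signature(func)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func),
        "parameters": {
            name: {
                "type": param.annotation.__name__,
                # A parameter without a default must be supplied by the caller.
                "required": param.default is inspect.Parameter.empty,
            }
            for name, param in signature.parameters.items()
        },
    }


tool_description = describe_tool(get_weather)
print(tool_description)
```

The calling agent never sees the function body, only the name, the docstring, and the parameter list. That is why the descriptions have to be clear.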

Neuro SAN has some fairly unique bridging code that allows agents to call other agents as Tools, as long as they too fulfill those necessary code-y descriptions for the upstream caller.

But with Neuro SAN, you don’t really have to worry about code so much. For the most part, any Neuro SAN agent has as part of its data-only description the following items:

- A human-readable name, so other agents and people can refer to it.

- A natural-language description, which tells what the agent does.

- An optional list of parameters with their natural-language descriptions, so that callers know what information they need to supply.

- Natural-language instructions that describe what the agent should do.

- An optional list of other Tool names the agent can call at its discretion to help get its job done.
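The five items above can be modeled as plain data. The sketch below uses a Python dataclass purely as an illustration; `AgentSpec`, its field names, and the `travel_planner` example are assumptions of mine and not Neuro SAN's actual schema, which lives in a HOCON file rather than in Python.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSpec:
    """Hypothetical container for the five items in the list above."""
    name: str                       # human-readable name
    description: str                # what the agent does
    instructions: str               # what the agent should do
    # Optional: parameter name -> natural-language description.
    parameters: dict[str, str] = field(default_factory=dict)
    # Optional: names of other Tools this agent may call.
    tools: list[str] = field(default_factory=list)


travel_planner = AgentSpec(
    name="travel_planner",
    description="Plans a trip for the user.",
    instructions=(
        "Ask flight_finder and hotel_finder for options, "
        "then combine them into one itinerary."
    ),
    parameters={
        "destination": "Where the user wants to go.",
        "dates": "When the user wants to travel.",
    },
    tools=["flight_finder", "hotel_finder"],
)
print(travel_planner)
```

Every field holds a string or a collection of strings. Nothing in the description is executable.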

I really cannot emphasize enough the fact that none of the items above is code-y at all. It really is just bits of English (or your language of choice) put in the right places in a specific file format. That’s it.

In a Neuro SAN agent network description, any one agent can reference any other agent in the HOCON file by name in its Tools list. This allows many different kinds of agents-talking-to-agents arrangements to emerge, such as step-by-step processes, delegative trees, and recursive graphs, all described in natural language that subject matter experts, not just programmers, can understand. I hope to talk more about these arrangements in future posts.
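Referencing agents by name means a network is effectively a directed graph. As a rough sketch, with made-up agent names and helper functions that are not part of Neuro SAN, the code below checks that every referenced name resolves and reports whether the graph is recursive.

```python
def unresolved_references(network: dict) -> list:
    """Return Tool names that no agent in the network defines."""
    return sorted(
        {tool for tools in network.values() for tool in tools if tool not in network}
    )


def has_cycle(network: dict) -> bool:
    """Return True if some agent can reach itself by following Tool references."""
    visiting, done = set(), set()

    def visit(name: str) -> bool:
        if name in done:
            return False
        if name in visiting:
            return True  # reached an agent that is still on the current path
        visiting.add(name)
        found = any(visit(tool) for tool in network.get(name, []))
        visiting.discard(name)
        done.add(name)
        return found

    return any(visit(name) for name in network)


# Hypothetical delegative arrangement: agent name -> names in its Tools list.
delegation = {
    "front_desk": ["billing", "tech_support"],
    "billing": ["refunds"],
    "tech_support": ["billing"],
    "refunds": [],
}
# Same agents, but refunds may hand back to front_desk, making the graph recursive.
recursive = {**delegation, "refunds": ["front_desk"]}

print(unresolved_references(delegation), has_cycle(delegation), has_cycle(recursive))
```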

Each activated Neuro SAN agent described in an agent network HOCON file has its own private conversation with an LLM, one that does not interfere with any of the other agents in getting its specific job done. As a result, the agent network as a whole is not really bounded by any single LLM’s token I/O limits. In fact, Neuro SAN’s asynchronous architecture enables agent parallelism, so that many of these agents’ private LLM conversations can be happening at the same time whenever possible, getting your end user their ultimate answer faster.
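The effect of that parallelism can be illustrated with plain `asyncio`. This is a generic sketch and not Neuro SAN's implementation: `ask_agent` is a hypothetical stand-in that sleeps instead of talking to an LLM, and the agent names are invented.

```python
import asyncio
import time


async def ask_agent(name: str, question: str, delay: float) -> str:
    """Hypothetical stand-in for one agent's private LLM conversation."""
    await asyncio.sleep(delay)  # simulates waiting on the LLM
    return f"{name}: answer to {question!r}"


async def ask_in_parallel(question: str) -> list:
    """Run independent downstream agents concurrently and collect their answers."""
    return await asyncio.gather(
        ask_agent("flight_finder", question, 0.2),
        ask_agent("hotel_finder", question, 0.2),
        ask_agent("car_rental", question, 0.2),
    )


start = time.perf_counter()
answers = asyncio.run(ask_in_parallel("weekend in Lisbon"))
elapsed = time.perf_counter() - start

print(answers)
print(f"elapsed: {elapsed:.2f}s")
```

Because the three simulated conversations overlap, the total wait is close to the slowest single call rather than the sum of all three.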

But the key takeaway here is that the agent network spec-ed out in a Neuro SAN HOCON file is a description of what can call what, not what will call what. What actually happens depends on the query to the agent network and the nature of the instructions therein. The real magic is: It’s the agents themselves that decide what happens.
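The difference between "can call" and "will call" can be sketched as follows. Everything here is hypothetical: `ALLOWED` plays the role of the network description, and `choose_tools` is a crude keyword stub standing in for the decision a real agent's LLM would make from its instructions.

```python
# What CAN call what: hypothetical agent name -> names in its Tools list.
ALLOWED = {
    "front_desk": ["billing", "tech_support"],
    "billing": [],
    "tech_support": [],
}

# Keyword stub only; a real agent decides by reasoning with its LLM.
KEYWORDS = {"billing": "invoice", "tech_support": "error"}


def choose_tools(agent: str, query: str) -> list:
    """Pick, from the agent's permitted Tools, the ones this query seems to need."""
    return [tool for tool in ALLOWED[agent] if KEYWORDS[tool] in query.lower()]


def run(agent: str, query: str, trace: list) -> list:
    """Record what WILL call what for one specific query."""
    for tool in choose_tools(agent, query):
        trace.append((agent, tool))
        run(tool, query, trace)
    return trace


print(run("front_desk", "My invoice looks wrong", []))
print(run("front_desk", "I get an error and my invoice is missing", []))
print(run("front_desk", "Hello there", []))
```

The same description yields three different call traces, and the third query triggers no downstream calls at all.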

This all enables wildly different behavior of agent networks that all use the same Neuro SAN HOCON file format and that can all reside on the same Neuro SAN server.


Daniel Fink is an AI engineering expert with 15+ years in AI and 30+ years in software — spanning CGI, audio, consumer devices, and AI.